AI vision, that is, image processing with artificial intelligence, is a heavily debated subject. However, the promise of this new technology has not yet materialized in many areas, such as industrial applications. As a result, there is not yet any long-term practical experience with AI vision.

Image Credit: IDS Imaging Development Systems GmbH

Even though there are several embedded vision systems on the market that make it possible to use AI in industrial settings, many facility managers are still hesitant to upgrade their applications and buy one of these platforms.

In situations where rule-based image processing has run out of options or has generally failed to find a solution, AI has already shown creative possibilities. Therefore, the question remains as to what is stopping the widespread uptake of this technology.

One of the main obstacles to the technology's further development is user-friendliness. Thus, one requirement is that anyone should be able to create their own AI-based image processing applications, even without extensive training in artificial intelligence or the required application programming.

AI can speed up a variety of work processes and reduce sources of error, while edge computing makes it possible to do away with expensive industrial computers and the complex infrastructure required for high-speed image data transmission.

New and Different

However, AI and machine learning (ML) operate very differently from traditional, rule-based image processing. This affects how image-processing tasks are approached and handled.

The quality of the results is now determined by training the neural networks on suitable image data, rather than by an image processing expert manually developing program code.

In other words, the object features needed for inspection are no longer specified by predefined rules; instead, the AI must be trained to recognize them. Additionally, the more diverse the training data, the more likely it is that the AI/ML algorithms will identify the features that really matter later in operation.

This sounds straightforward, but it leads to the desired outcome only when combined with enough knowledge and experience.

Without a trained eye for the right image data, errors will occur here as well. As a result, working with machine learning methods requires different key competencies than working with rule-based image processing.

However, not everyone has the time or resources to dive into the topic from the ground up to learn a set of new critical skills for using machine learning techniques.

The main problem with a new technology is that it is difficult to trust a system that produces excellent results with little effort when its decisions cannot be checked by simply reviewing the code.

Complex and Misunderstood

Logically, learning how AI vision functions seems imperative. Yet, without understandable, simple explanations, it is challenging to assess the results.

Building confidence in a new technology typically requires skills and experience, and it can take a long time before one fully understands what the technology can do, how it operates, how to use it, and how to manage it effectively.

Matters are complicated further by the fact that AI vision competes with an established system, for which the right conditions have been created over recent years through knowledge, training, documentation, software, hardware, and development environments.

By contrast, AI still seems raw and immature, and despite the well-known advantages and the high accuracy that can be achieved with AI vision, it is typically difficult to track down errors.

The other side of the coin is that the algorithms’ development is hampered by a lack of clear understanding of their inner workings or by unexpected results.

(Not) a Black Box

A common misconception about neural networks is that they make decisions in a mysterious, opaque black box.

Although DL models are undoubtedly complex, they are not black boxes. In fact, it would be more accurate to call them glass boxes, because we can literally look inside and see what each component is doing.

The black box metaphor in machine learning

While the complex interactions of a neural network's artificial neurons may initially be difficult for humans to comprehend, the inference decisions made by these systems are still the unambiguous result of a mathematical system; they can therefore be reproduced and analyzed.

What is still lacking are the proper tools to support this evaluation, so there is plenty of room for improvement in this area of AI. It is also an area that shows how well the various AI systems on the market can support users in their endeavors.

Software Makes AI Explainable

For these reasons, IDS Imaging Development Systems GmbH is working intensively to develop precisely these tools.

One of the outcomes is already in place: the IDS NXT inference camera system. The overall quality of a trained neural network can be assessed and understood statistically using a so-called confusion matrix.

After the network has been trained, it can be validated using a predetermined set of images with known results. A table then compares these expected results with the results actually determined by inference.

This makes it clear how frequently the test objects were identified correctly or incorrectly for each trained object class. A general assessment of the trained algorithm’s quality can then be made based on the hit rates produced.
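The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the IDS NXT implementation: the screw classes, the test results, and the function names `confusion_matrix` and `hit_rates` are all assumptions made up for this example.

```python
from collections import Counter

def confusion_matrix(expected, predicted, classes):
    """Rows: known (expected) class; columns: class returned by inference."""
    counts = Counter(zip(expected, predicted))
    return [[counts[(e, p)] for p in classes] for e in classes]

def hit_rates(matrix, classes):
    """Fraction of test images of each class that were identified correctly."""
    rates = {}
    for i, name in enumerate(classes):
        row_total = sum(matrix[i])
        rates[name] = matrix[i][i] / row_total if row_total else 0.0
    return rates

# Hypothetical screw classes and test results, for illustration only.
classes = ["M3", "M4", "M5"]
expected = ["M3", "M3", "M3", "M4", "M4", "M4", "M5", "M5", "M5", "M5"]
predicted = ["M3", "M3", "M4", "M4", "M4", "M4", "M5", "M4", "M5", "M5"]

matrix = confusion_matrix(expected, predicted, classes)
rates = hit_rates(matrix, classes)

for name, row in zip(classes, matrix):
    print(f"{name}: {row}  hit rate {rates[name]:.2f}")
```

Each off-diagonal entry counts test images of one class that were assigned to another, so in this made-up data the M3 row shows one M3 screw mistaken for an M4.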

The matrix also makes it clear for which classes the recognition accuracy may still be too low for productive use. It does not, however, explain in detail why.

Figure 1. This confusion matrix of a CNN classifying screws shows where the identification quality can be improved by retraining with more images. Image Credit: IDS Imaging Development Systems GmbH.

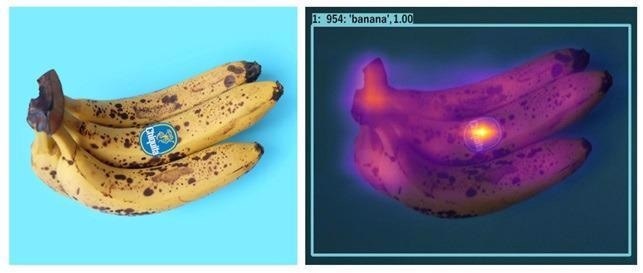

The attention map comes in handy at this point, showing a sort of heat map that highlights the regions or image contents that the neural network concentrates on and uses to guide decisions.

In IDS NXT lighthouse, the creation of this form of visualization is activated during the training process, based on the decision paths generated in training. This enables the network to produce a heat map for each image while the analysis is running.

As a result, difficult-to-understand decisions made by AI are made clearer, making it easier for neural networks in industrial settings to be accepted.

It can also be used to identify and avoid data biases that could lead a neural network to draw the wrong conclusions during inference (see Figure 2).
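To give a feel for how such a heat map can expose a bias, here is a minimal sketch of occlusion sensitivity, a generic technique that masks one image patch at a time and records how much the classifier's score drops. It is not necessarily how IDS NXT lighthouse generates its attention maps. The function names, the 4x4 toy image, and the deliberately biased scoring function are assumptions for illustration only.

```python
def occlusion_heat_map(image, score_fn, patch=2, baseline=0.0):
    """Score drop when each patch is masked; larger values mean more influence."""
    height, width = len(image), len(image[0])
    reference = score_fn(image)
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            # Copy the image and blank out one patch.
            masked = [row[:] for row in image]
            for y in rows:
                for x in cols:
                    masked[y][x] = baseline
            drop = reference - score_fn(masked)
            # Assign the score drop to every pixel of the masked patch.
            for y in rows:
                for x in cols:
                    heat[y][x] = drop
    return heat

def biased_score(image):
    """Toy stand-in for a biased classifier: the score depends only on the
    brightness of the top-left 2x2 region (think: a brand sticker)."""
    region = [image[y][x] for y in range(2) for x in range(2)]
    return sum(region) / len(region)

image = [[1.0] * 4 for _ in range(4)]  # hypothetical 4x4 grayscale image
heat = occlusion_heat_map(image, biased_score)

for row in heat:
    print(row)
```

In this toy case, all of the attention lands on the top-left patch and none on the rest of the image, which is the kind of pattern that would prompt a closer look at the training data.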

Poor input results in poor output. An AI system identifies patterns and makes predictions based on its data, so it needs good data to learn “correct behavior”.

If an AI is built in a lab environment using data that does not reflect the later application, or worse, if the patterns in the data themselves carry biases, the system will adopt and apply those biases.

Figure 2. This heat map shows a classic data bias: attention is concentrated on the Chiquita label of the banana. Because of incorrect or unrepresentative training images of bananas, the CNN has evidently learned that the Chiquita label always indicates a banana. Image Credit: IDS Imaging Development Systems GmbH.
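A bias like this can sometimes be caught before training with a simple audit of the data set. The sketch below assumes that each training image carries some metadata, which the source does not state: the field names `label` and `has_sticker`, the sample records, and the function `attribute_rate_by_class` are all hypothetical.

```python
from collections import defaultdict

def attribute_rate_by_class(samples, attribute):
    """Return {class label: fraction of its samples where `attribute` is true}."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for sample in samples:
        totals[sample["label"]] += 1
        if sample[attribute]:
            hits[sample["label"]] += 1
    return {label: hits[label] / totals[label] for label in totals}

# Hypothetical training-set metadata, for illustration only.
samples = [
    {"label": "banana", "has_sticker": True},
    {"label": "banana", "has_sticker": True},
    {"label": "banana", "has_sticker": True},
    {"label": "apple", "has_sticker": False},
    {"label": "apple", "has_sticker": False},
]

sticker_rates = attribute_rate_by_class(samples, "has_sticker")
print(sticker_rates)
```

An attribute that appears in every image of one class and in none of another is a shortcut the network may learn instead of the object itself. Adding images without the sticker, or images of other fruit with one, would be a targeted adjustment to the training set.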

Software tools enable users to track the behavior and outcomes of AI vision, directly link these to flaws in the training data set, and make targeted adjustments as needed. The fact that AI is essentially just statistics and mathematics makes it easier for everyone to understand and explain.

Although understanding the mathematics and following it in the algorithm is rather challenging, tools like confusion matrices and heat maps help make decisions and the justifications for them visible and, therefore, understandable.

It is Just Getting Started

AI vision has the potential to improve a variety of vision processes when used properly. Hardware and software alone, however, are not enough to convince industry as a whole to accept AI. Manufacturers are frequently faced with the challenge of sharing their knowledge and highlighting user-friendliness as well as integrated systems that support users.

AI still has a long way to go before it can catch up with the best practices of rule-based imaging applications, which have built a loyal customer base over years of use, backed by extensive documentation, knowledge transfer, and software tools. Still, it is encouraging that such AI systems and supporting tools are already being developed.

Additionally, standards and certifications are being developed to broaden acceptance and make AI explanations simple enough to present to large groups of people.

With IDS NXT, an integrated AI system is now available whose sophisticated, user-friendly software environment allows any user group to apply it quickly and easily as an industrial tool, even without comprehensive knowledge of image processing, machine learning, or application programming.

This information has been sourced, reviewed and adapted from materials provided by IDS Imaging Development Systems GmbH.

For more information on this source, please visit IDS Imaging Development Systems GmbH.